ACRO member companies have compiled a series of case studies that demonstrate how centralized monitoring identifies critical issues more quickly and effectively than traditional on-site monitoring methods. This is the first post in a series highlighting centralized monitoring at work. Check back for more examples of how centralized monitoring has benefited ACRO member companies and their clients.

Case Study 1: Early Detection of Adverse Event Underreporting

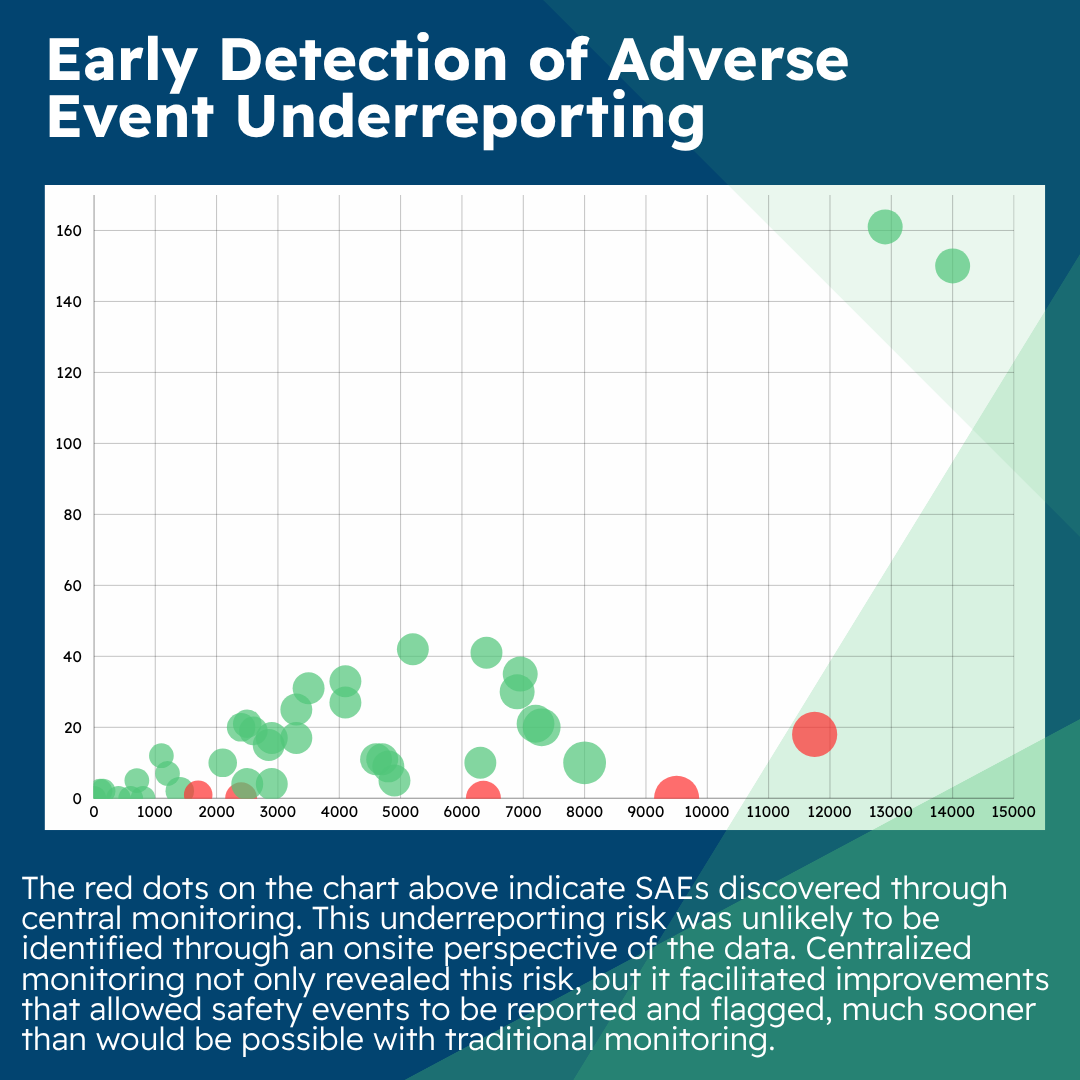

A periodic review of key risk indicators (KRIs) was conducted across several sites as part of centralized monitoring deliverables for a Phase II oncology study. As a result of this review, emerging risks related to lower-than-average adverse event (AE)/serious adverse event (SAE) reporting were identified and triggered further analysis by the central monitor.
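As a rough illustration of how a KRI review of this kind might surface a low-reporting site, the sketch below compares each site's AE rate per patient visit with the study-wide median. The site names, counts, function name, and 50% cutoff are all hypothetical and are not taken from the study described here.

```python
from statistics import median


def flag_low_ae_sites(site_counts, ratio_threshold=0.5):
    """Return sites whose AE rate is below a fraction of the study median.

    site_counts maps a site ID to (ae_count, patient_visits).
    ratio_threshold is a hypothetical cutoff, not a regulatory value.
    """
    # AE rate per patient visit; sites with no visits yet are skipped.
    rates = {
        site: ae_count / visits
        for site, (ae_count, visits) in site_counts.items()
        if visits > 0
    }
    if not rates:
        return []
    cutoff = median(rates.values()) * ratio_threshold
    return sorted(site for site, rate in rates.items() if rate < cutoff)


# Hypothetical data: (AEs reported, patient visits completed) per site.
example_counts = {
    "site_A": (12, 40),
    "site_B": (9, 30),
    "site_C": (1, 35),
    "site_D": (10, 25),
}
flagged_sites = flag_low_ae_sites(example_counts)
print(flagged_sites)
```

A flag like this is only a prompt for further analysis by the central monitor; a low rate can have legitimate explanations, such as a site whose patients are early in treatment.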

To mitigate this underreporting risk, the central monitor identified actions for the clinical research associate (CRA) to take:

- Ensure site staff understood the safety data reporting requirements,

- Engage the principal investigator (PI) onsite to ensure that medical records provided clear evidence that a safety assessment had been conducted during study visits/contacts, and

- Extend targeted source data review (SDR) during the next onsite visit to identify potential underreported AEs.

These mitigation actions, deployed as a result of centralized monitoring, led to the identification of safety events (six AEs and one SAE) at subsequent participant visits and to improved site processes, with support from the CRA and site staff.

It’s important to note that this underreporting risk was unlikely to have been identified from an onsite view of the data alone. Centralized monitoring not only revealed this risk but also facilitated improvements that allowed safety events to be reported and flagged much sooner than would have been possible with traditional monitoring.

Case Study 2: Rapid Detection of Unreported Serious Adverse Events

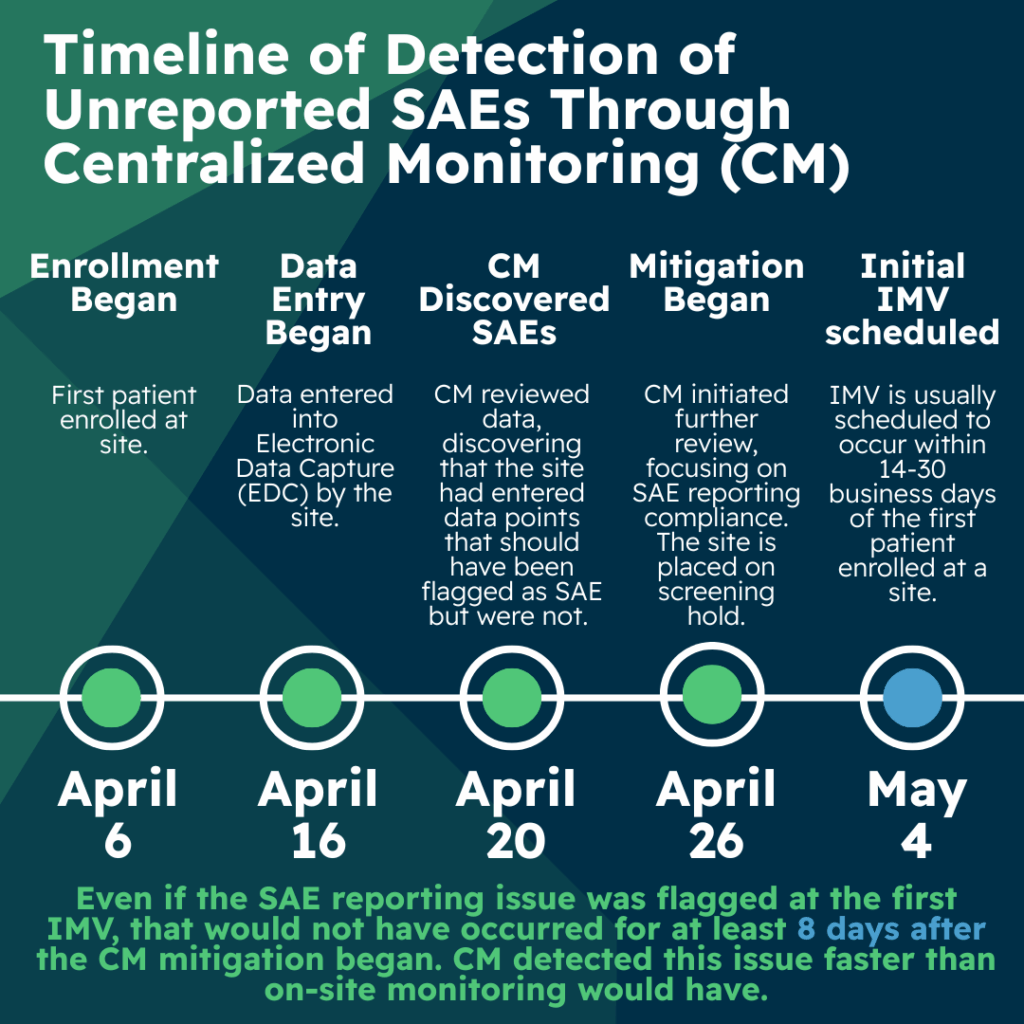

A recent Phase III oncology trial illustrates how central monitoring can be used to identify unreported serious adverse events (SAEs) and to encourage timely data entry by the site. In this study, sites were encouraged to enter data into the electronic data capture (EDC) system within three days of any patient visit, and central monitors were required to review data on a patient-by-patient basis within three days of data entry by the site.
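The two three-day targets can be expressed as a simple lag check. The sketch below is a minimal example that assumes the targets are counted in calendar days (the study may have counted them differently); the function name and the dates are hypothetical.

```python
from datetime import date

# Targets from the study description; calendar days are an assumption.
ENTRY_TARGET_DAYS = 3
REVIEW_TARGET_DAYS = 3


def timeline_lags(visit_date, entry_date, review_date):
    """Compute data-entry and review lags and compare them with the targets."""
    entry_lag = (entry_date - visit_date).days
    review_lag = (review_date - entry_date).days
    return {
        "entry_lag_days": entry_lag,
        "entry_on_time": entry_lag <= ENTRY_TARGET_DAYS,
        "review_lag_days": review_lag,
        "review_on_time": review_lag <= REVIEW_TARGET_DAYS,
    }


# Hypothetical visit: data entered a week after the visit, reviewed two days later.
lags = timeline_lags(date(2025, 3, 3), date(2025, 3, 10), date(2025, 3, 12))
print(lags)
```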

On April 6, 2025, the first patient was enrolled at this site. The site entered data on April 16, and on April 20, central monitoring reviewed the EDC data and discovered that the site had entered a combination of symptoms and a hospitalization that was not flagged as an SAE. The SAE also had not been reported to either pharmacovigilance or the institutional review board (IRB). An additional eleven patients were enrolled at this site in quick succession and, because of the unreported SAE finding, central monitoring initiated a further review of all patients at this site, focused on SAE reporting compliance.

Upon review, six additional unreported SAEs were identified at the site. This issue was escalated and discussed with the appropriate team members and the sponsor. Together, the sponsor and the contract research organization (CRO) agreed to put the site on a screening hold until the site could investigate its process failures and implement corrective and preventive actions.

The discovery of these unreported SAEs preceded the initial interim monitoring visit (IMV) by several weeks. The IMV is often scheduled to occur within 14-30 business days of the first patient being enrolled at a site. In this case, the first IMV was scheduled for May 4. Even if the first IMV had been scheduled for April 20, it can be challenging for the clinical research associate (CRA) to arrange additional time onsite to review data for additional patients on such short notice. Given this time constraint, there is a strong likelihood that the CRA would not have been able to identify the full extent of these issues during that first IMV.

An added benefit of central monitoring is improved site compliance with data entry timelines. Because sites understand that central monitors review data in near real time to help identify errors and issues before they are repeated by the site, sites tend to be more compliant with data entry requirements instead of waiting to enter data just before an onsite IMV.

Because of this early identification of the issue, the CRA met with the principal investigator (PI) and other site staff prior to the IMV and discussed protocol, good clinical practice, and IRB reporting requirements. In addition, the project team updated the EDC system to program additional SAE alerts to mitigate future errors at other sites as well.

This case study highlights the timeliness of centralized monitoring reviews as compared to what would be expected with the normal cadence of IMVs.

Case Study 3: Flagging Data Quality Risks at a Specific Site

Finally, this case study illustrates how centralized monitoring supported early identification of data quality risks across three different primary endpoints in a Phase II analgesia study. In this study, central monitors were to review data on a patient-by-patient basis within three days of site data entry and monthly for trends at a given site. Data managers reviewed trends across the study, specifically lab reconciliation, on a semi-annual basis.

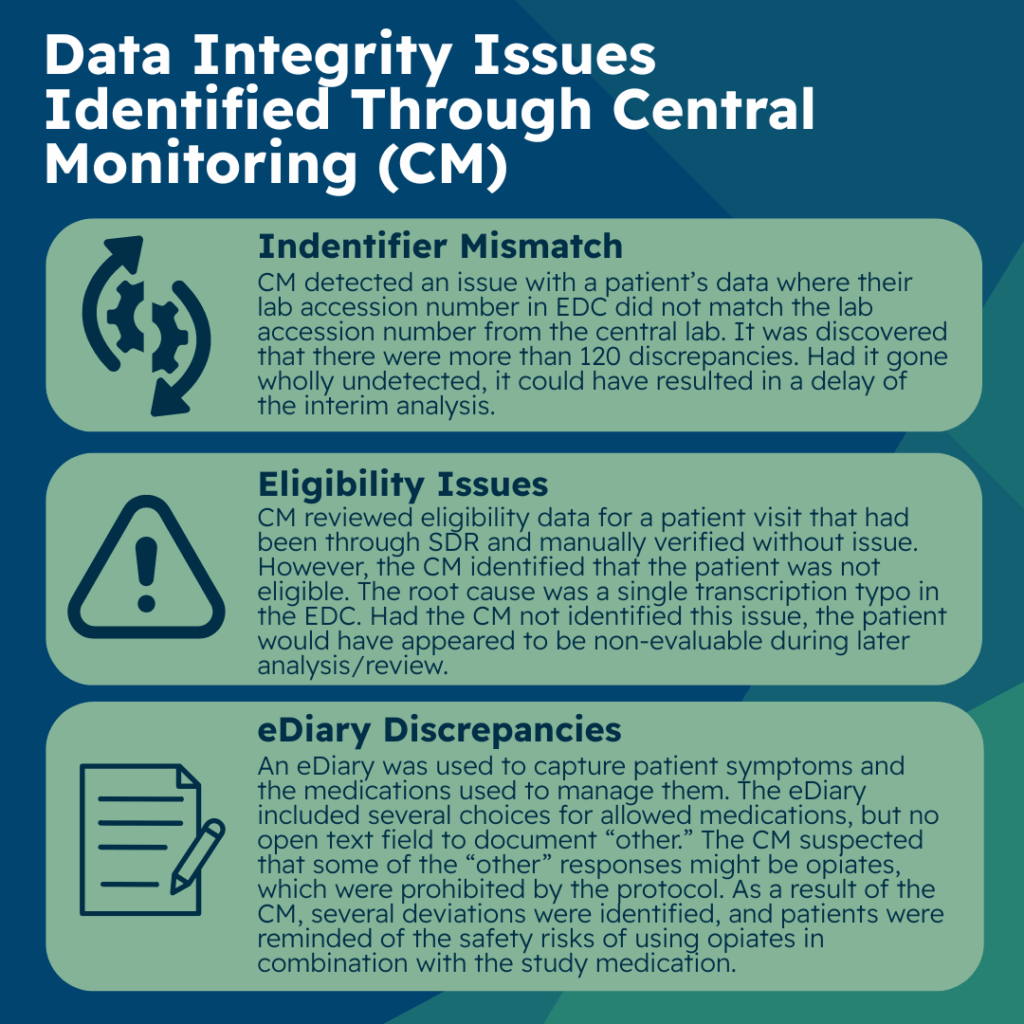

The first of three data integrity issues identified by the central monitor involved discrepancies between the lab accession number or unique identifier from the central lab and the site-recorded lab accession number in electronic data capture (EDC). In this case, the central monitor detected an issue with a patient’s data where the lab accession number in EDC did not match the lab accession number in the data coming directly from the central lab.

Upon further investigation, they discovered this was a more widespread issue that had impacted more sites and patients. They alerted the project manager and data management team leaders that there were more than 120 such discrepancies. This larger issue might not have been identified through data management reconciliation or resolved until later in the trial. Had it gone undetected, it could have resulted in delay of the interim analysis.
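A reconciliation of this kind can be sketched as a keyed comparison between the two data sources. The example below is illustrative only: the record layout, field names, normalization rule, and accession values are hypothetical and do not reflect the study's actual EDC or central lab formats.

```python
def normalize(accession):
    """Trim whitespace and upper-case so formatting differences are not flagged."""
    return accession.strip().upper()


def find_accession_mismatches(edc_rows, lab_rows):
    """Return EDC records whose accession number differs from the central lab's.

    Both inputs are lists of dicts with hypothetical keys:
    'patient_id', 'visit', and 'accession'.
    """
    lab_by_key = {(r["patient_id"], r["visit"]): r["accession"] for r in lab_rows}
    mismatches = []
    for row in edc_rows:
        lab_accession = lab_by_key.get((row["patient_id"], row["visit"]))
        if lab_accession is None:
            continue  # a missing lab record would need its own check
        if normalize(row["accession"]) != normalize(lab_accession):
            mismatches.append(
                {
                    "patient_id": row["patient_id"],
                    "visit": row["visit"],
                    "edc": row["accession"],
                    "lab": lab_accession,
                }
            )
    return mismatches


# Hypothetical records: P002 has transposed digits; P003 differs only in formatting.
edc_rows = [
    {"patient_id": "P001", "visit": "V1", "accession": "A10001"},
    {"patient_id": "P002", "visit": "V1", "accession": "A10020"},
    {"patient_id": "P003", "visit": "V1", "accession": " a10003 "},
]
lab_rows = [
    {"patient_id": "P001", "visit": "V1", "accession": "A10001"},
    {"patient_id": "P002", "visit": "V1", "accession": "A10002"},
    {"patient_id": "P003", "visit": "V1", "accession": "A10003"},
]
mismatches = find_accession_mismatches(edc_rows, lab_rows)
print(mismatches)
```

Running the same comparison across all sites, rather than one patient at a time, is what would turn a single finding into a count of study-wide discrepancies.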

In the second of the three data integrity issues, the central monitor reviewed eligibility data for a patient visit that had already undergone source data review (SDR) and verification by the clinical research associate (CRA), with no issues identified. The central monitor, however, found that, based on the data entered in EDC, the patient did not appear to be eligible. A query was raised, and the site confirmed that there was a transcription error in EDC and made the correction.

When the CRA was alerted to the issue, they recognized that they had overlooked the EDC transcription error. Root cause analysis determined that most of the CRA’s time had been spent confirming that the patient met eligibility requirements across all of the various sources of data (e.g., prior medical records, labs, scales and ratings, protocol procedures and baseline safety assessments), and that a single transcription typo in the EDC had been missed. In addition, due to the complexity of logic across related criteria, this typo was undetectable via a programmed edit check in EDC.

Had the central monitor not identified this issue, the patient would have appeared to be non-evaluable during later analysis preparations by the statistics team, during medical review, or during sponsor review of the data much later. This example illustrates that centralized monitoring can identify transcription errors that are not always caught during onsite SDR and source data verification (SDV). It further highlights that even with thorough onsite SDR/SDV, transcription errors are hard to detect, and the review is subject to human error because of the sheer volume of data the CRA must check across many different data sources.

The third and final of the three data integrity issues that centralized monitoring detected involves patient diary data. An electronic patient diary (eDiary) was used to capture symptoms the patient was experiencing, and which rescue strategies or medications patients used to manage their breakthrough pain symptoms.

The eDiary included several choices for allowed medications and an “other” option, but no open text field to document what “other” meant. Because this data was electronically captured, it did not require transcription into EDC, and the sites and CRAs had little responsibility for this diary data. The central monitors had several review steps related to trending of this data. One of the steps was to look for general trends such as signs of technology glitches, missing data, potential protocol deviations, inconsistent data, or overly homogeneous data. During this review, the central monitor identified that some of the “other” responses on the eDiary might reflect the use of opiates to control breakthrough pain, which was prohibited by the protocol.

Opiates were intentionally not included as an option because the design team did not want to give the impression that the use of opiates was allowed in this trial. As a result of the central monitor’s finding, sites and CRAs were asked to ensure that patients were questioned about their “other” responses so that any prohibited use of opiates could be documented appropriately as a protocol deviation. Several deviations were identified and documented, and sites and patients were reminded of the safety risks of using opiates in combination with the study medication in this trial.
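One way a trend review of “other” eDiary responses could be approximated is to compute, per patient, the share of rescue entries recorded as “other” and list the patients above a chosen share for follow-up. This is a minimal sketch under stated assumptions: the patient IDs, medication labels, and 25% threshold are hypothetical, and a high share does not by itself indicate prohibited medication use.

```python
from collections import defaultdict


def other_response_share(entries):
    """Return each patient's share of rescue entries recorded as 'other'.

    entries is an iterable of (patient_id, choice) pairs.
    """
    totals = defaultdict(int)
    others = defaultdict(int)
    for patient_id, choice in entries:
        totals[patient_id] += 1
        if choice == "other":
            others[patient_id] += 1
    return {patient: others[patient] / totals[patient] for patient in totals}


def patients_to_follow_up(entries, min_share=0.25):
    """List patients whose 'other' share meets a hypothetical review threshold."""
    shares = other_response_share(entries)
    return sorted(patient for patient, share in shares.items() if share >= min_share)


# Hypothetical diary entries; the medication labels are placeholders.
diary_entries = [
    ("P001", "medication_1"), ("P001", "other"),
    ("P001", "other"), ("P001", "medication_2"),
    ("P002", "medication_2"), ("P002", "medication_2"),
    ("P002", "medication_1"), ("P002", "medication_1"),
    ("P003", "other"), ("P003", "medication_1"), ("P003", "medication_1"),
    ("P003", "medication_1"), ("P003", "medication_1"),
]
follow_up = patients_to_follow_up(diary_entries)
print(follow_up)
```

The output is a list of patients for the site to question about what “other” meant, which matches the follow-up described in this case; the diary data alone cannot say what was actually taken.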

Conclusion

The case studies presented here demonstrate the various (and sometimes unexpected) ways that centralized monitoring can play a significant role in both patient safety and data integrity. They further illustrate the close relationship central monitors must have in communicating with the CRAs and other project team leaders.